Cloud computing has become a cornerstone of modern technology, reshaping how businesses operate and how IT professionals approach infrastructure. This post, written for tech experts, examines “Cloudonomics”: its key principles, benefits, and challenges, and its impact on the tech industry.

Understanding Cloudonomics

1. Defining Cloudonomics

Cloudonomics refers to the economic principles and trade-offs associated with cloud computing. It encompasses many factors, from cost optimization to performance enhancement, scalability, and resource allocation efficiency.

2. Economic Drivers of Cloud Adoption

Adopting cloud computing is not merely a technological shift but a strategic move rooted in economic considerations. The principles of Cloudonomics, which focus on the financial aspects of cloud adoption, play a pivotal role in shaping organizations’ decisions. This section covers two economic drivers of cloud adoption: (1) cost efficiency and (2) scalability and flexibility.

2.1 Cost Efficiency

2.1.1 Pay-as-You-Go Models

One of the foundational pillars of Cloudonomics is the emphasis on cost efficiency. Traditional on-premise IT infrastructure often involves significant upfront capital expenditures, from server hardware to networking equipment. Cloud computing introduces a paradigm shift by offering pay-as-you-go models. This approach allows organizations to pay only for the computing resources they actually consume, transforming capital expenses into operational expenses.
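
To make the capital-versus-operational trade-off concrete, here is a minimal sketch that compares an amortized on-premise purchase with a pay-as-you-go bill. The function names and every figure are illustrative assumptions, not real vendor pricing, and the model deliberately ignores discounts, staffing, and data transfer.

```python
def on_prem_monthly_cost(upfront_cost: float, lifetime_months: int,
                         monthly_running_cost: float) -> float:
    """Spread the upfront purchase evenly over its lifetime, then add running costs."""
    return upfront_cost / lifetime_months + monthly_running_cost


def pay_as_you_go_monthly_cost(hourly_rate: float, hours_used: float) -> float:
    """Cloud bill for the month: pay only for the hours actually consumed."""
    return hourly_rate * hours_used


def break_even_hours(upfront_cost: float, lifetime_months: int,
                     monthly_running_cost: float, hourly_rate: float) -> float:
    """Monthly usage at which both options cost the same."""
    return on_prem_monthly_cost(upfront_cost, lifetime_months,
                                monthly_running_cost) / hourly_rate


# Hypothetical figures: a 36,000 server kept for 36 months, 200/month to run,
# versus an equivalent cloud instance billed at 2.00 per hour.
on_prem = on_prem_monthly_cost(36_000, 36, 200)
cloud = pay_as_you_go_monthly_cost(2.0, 300)
threshold = break_even_hours(36_000, 36, 200, 2.0)
print(on_prem, cloud, threshold)
```

Under these assumed numbers, the cloud option is cheaper for any workload that runs fewer hours per month than the break-even threshold; a machine that is busy around the clock may tip the balance the other way.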

2.1.2 Minimizing Upfront Infrastructure Costs

Cloudonomics recognizes the financial advantage of minimizing upfront infrastructure costs. Organizations no longer need to invest heavily in hardware and data centers, reducing the financial burden of maintaining physical infrastructure. Instead, they can leverage the infrastructure provided by cloud service providers, paying only for the resources used.

2.1.3 Resource Optimization

Cloudonomics goes beyond mere cost reduction. It emphasizes resource optimization, ensuring that organizations use computing resources efficiently. Cloud platforms enable dynamic resource allocation through auto-scaling and load balancing, which helps prevent both over-provisioning and underutilization. This optimization increases cost savings and helps ensure that organizations pay only for the resources they need.
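
The cost of over-provisioning can be illustrated with a small sketch that prices the same demand curve two ways: a fleet sized for the peak at all times, and a fleet resized every hour. The demand values, per-instance capacity, and hourly rate are made-up assumptions, and real auto-scaling reacts with some delay rather than matching demand perfectly.

```python
import math


def fixed_capacity_cost(hourly_demand, capacity_per_instance, hourly_rate):
    """Cost of running enough instances for the peak hour during every hour."""
    peak_instances = max(math.ceil(d / capacity_per_instance) for d in hourly_demand)
    return peak_instances * len(hourly_demand) * hourly_rate


def autoscaled_cost(hourly_demand, capacity_per_instance, hourly_rate, min_instances=1):
    """Cost when the fleet is resized each hour to just cover that hour's demand."""
    instance_hours = sum(
        max(min_instances, math.ceil(d / capacity_per_instance))
        for d in hourly_demand
    )
    return instance_hours * hourly_rate


# Hypothetical demand in requests per second over four hours, with one spike.
demand = [100, 100, 400, 100]
print(fixed_capacity_cost(demand, capacity_per_instance=100, hourly_rate=0.5))
print(autoscaled_cost(demand, capacity_per_instance=100, hourly_rate=0.5))
```

The gap between the two totals is the price of idle capacity held in reserve for the spike.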

2.2 Scalability and Flexibility

2.2.1 Dynamic Resource Scaling

Scalability is a cornerstone of Cloudonomics. The ability to scale resources up or down based on demand is a paradigm shift from traditional IT infrastructure. Cloud platforms allow organizations to adjust their computing resources dynamically, in near real time, supporting performance during peak demand and cost savings during periods of lower demand.
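
One common way to decide how far to scale is a proportional rule: grow or shrink the fleet so that average utilization moves back toward a target. The sketch below is an assumption-laden illustration of that idea, similar in spirit to the rule used by autoscalers such as the Kubernetes Horizontal Pod Autoscaler; the function name, parameters, and limits are hypothetical and not any provider's API.

```python
import math


def desired_instances(current_instances: int, observed_util_pct: float,
                      target_util_pct: float, min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Proportional scaling: resize so average utilization approaches the target."""
    if target_util_pct <= 0:
        raise ValueError("target_util_pct must be positive")
    raw = math.ceil(current_instances * observed_util_pct / target_util_pct)
    # Clamp to the configured floor and ceiling so the fleet never vanishes or runs away.
    return max(min_instances, min(max_instances, raw))


print(desired_instances(4, observed_util_pct=90, target_util_pct=60))  # scale out
print(desired_instances(6, observed_util_pct=20, target_util_pct=60))  # scale in
```

The floor and ceiling matter as much as the formula: the floor keeps the service available during quiet periods, and the ceiling caps the bill during an unexpected surge.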

2.2.2 Meeting Varied Workloads

Cloudonomics recognizes that organizations often face fluctuating workloads. Scalability and flexibility in cloud computing enable seamless adaptation to varying workloads. Whether handling increased user traffic during a product launch or scaling down during periods of reduced activity, the cloud provides the agility needed to meet diverse business demands.

2.2.3 Cost-Effective Resource Utilization

The flexibility offered by cloud platforms extends to resource utilization. Organizations can select the specific type and amount of resources required for a given workload. This fine-grained control lets them match capacity to the workload’s actual needs and contributes to cost-effective utilization, reducing the need to maintain excess capacity for occasional peaks.
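
Selecting the right resource type can be sketched as a simple filter-and-minimize step: keep the instance types that satisfy the workload, then pick the cheapest. The catalogue below is entirely hypothetical (names, sizes, and prices are invented for illustration) and only considers vCPUs and memory.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int
    hourly_rate: float


# Hypothetical catalogue; real providers publish their own types and prices.
CATALOGUE = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 16, 0.20),
    InstanceType("large", 8, 32, 0.40),
]


def cheapest_fit(vcpus_needed: int, memory_gib_needed: int,
                 catalogue: Sequence[InstanceType] = CATALOGUE) -> Optional[InstanceType]:
    """Return the cheapest type meeting both requirements, or None if nothing fits."""
    candidates = [
        t for t in catalogue
        if t.vcpus >= vcpus_needed and t.memory_gib >= memory_gib_needed
    ]
    return min(candidates, key=lambda t: t.hourly_rate, default=None)


choice = cheapest_fit(vcpus_needed=2, memory_gib_needed=8)
print(choice.name if choice else "no suitable type")
```

A workload that needs little CPU but more memory than the smallest type offers is pushed to the next size up, which is exactly the kind of mismatch that fine-grained instance families are meant to reduce.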

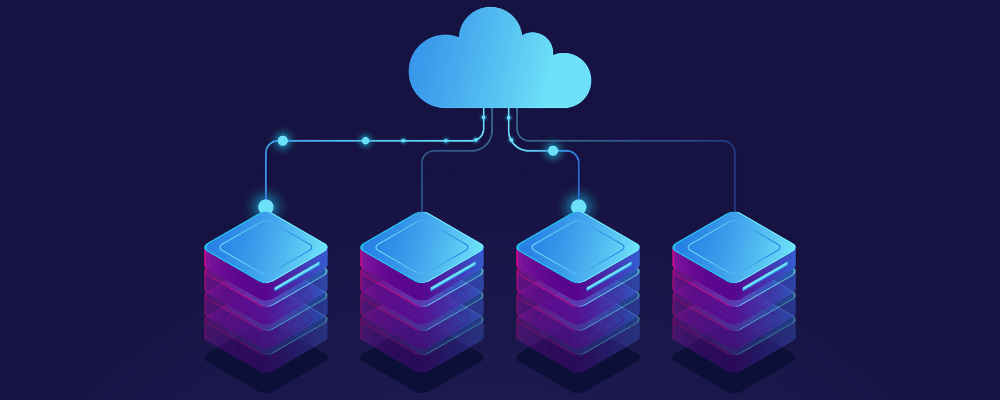

Key Components of Cloudonomics

3. Resource Management and Optimization

3.1 Virtualization

Efficient use of virtualization technologies plays a crucial role in Cloudonomics, enabling the creation of virtual instances to maximize hardware utilization.
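
As a rough illustration of how virtualization raises hardware utilization, the sketch below packs virtual machines onto as few physical hosts as it can using a first-fit-decreasing heuristic. This is a simplified assumption: it models vCPUs only, and real hypervisor schedulers also weigh memory, I/O, affinity rules, and overcommit ratios.

```python
def pack_vms(vm_vcpus, host_vcpus):
    """First-fit decreasing: place each VM, largest first, on the first host with room."""
    hosts = []  # each host is a list of the VM sizes placed on it
    for vm in sorted(vm_vcpus, reverse=True):
        if vm > host_vcpus:
            raise ValueError(f"VM needing {vm} vCPUs cannot fit on any host")
        for host in hosts:
            if sum(host) + vm <= host_vcpus:
                host.append(vm)
                break
        else:
            # No existing host had room, so bring another one into service.
            hosts.append([vm])
    return hosts


# Six hypothetical VMs consolidated onto 16-vCPU hosts.
placement = pack_vms([4, 2, 2, 8, 6, 2], host_vcpus=16)
print(len(placement), placement)
```

Without virtualization, each of these workloads might occupy its own mostly idle server; consolidating them means fewer machines to buy, power, and maintain.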

3.2 Automation

Automated processes contribute to cost reduction and operational efficiency, allowing tech experts to focus on strategic tasks rather than routine management.